Ever since Google expanded Webmaster Central to include a link analysis tool, we have been collecting the raw data to analyze later. Last month, we looked at some February and March link data. This month, let's look at April and compare it with February and March.

This month, only three of the top ten most-linked-to pages made Digg's front page:

- Yahoo! Removes Category (Directory) Links From Under Search Results

- Google Wins in Kinderstart Lawsuit

- Google Sending Out More Google Coolers to AdWords Advertisers

Tamar did an excellent job organizing the data for me to look at and analyze a bit more. Here is our most recent linkage data from Google Webmaster Central's link tool. One thing that stands out about this data is that the first article has 15,426 more links than the second most-linked-to article, which is huge. Anyway, here are the most-linked-to articles, based on April's linkage data.

To remind you, here is the March linkage data that we posted in the past. It may look like some duplicates are listed, but trust me, this is how the data was exported. So even though some articles show up twice as separate entries, that is how we got it from Google in the CSV. Why? I do not know.

Here is the February data. You will see only the top nine, because that is what we had at the time. Also, Google themselves told us that the data was not 100% complete yet, so it may not be wise to rely too heavily on this data in our analysis.

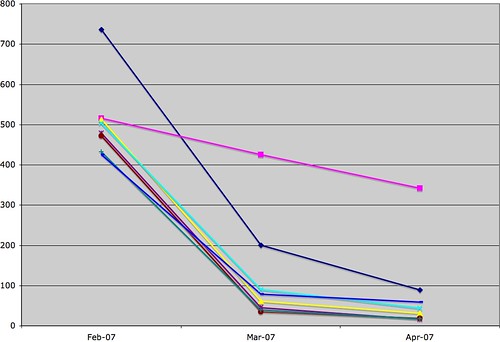

The first thing you notice when you plot this on a chart is that as articles get older, they have fewer links (most of the time). It is understandable that a newly written article will attract a lot of fresh links. However, in many of the examples we listed above, the links have dropped by more than half.

A quick line chart of the articles that had the most links in February shows the downward trend in links as the articles age.

Why is this? Why do old articles have fewer links over time? Here are some possible ideas:

- Pages with the links are deleted

- Pages with the links are password protected

- Pages with the links are moved into the supplemental index

- Pages with the links are temporarily offline

But honestly, this did not satisfy me. First, does Google not count links from pages within the supplemental index? I was thinking about testing this, but the problem is that we do not know how recent this data is. Google gave us our linkage data based on an archived copy of their data. A page can be in the supplemental index at any point, so it is hard to say, without a shadow of a doubt, that a page was in the supplemental index at point A. We don't know when the links were calculated, so we cannot produce that evidence.

One weird observation was that the majority of the most-linked-to pages for the months above fell on or around the same date. Five of the top nine articles in February's data fell on the 25th or the 26th of December 2006. Six of the top ten articles from the March data set fell on February 20th and 21st of 2007. And a majority of the April most-linked-to articles fell on or around March 26th.

This seems to add more evidence that Google finds new links quickly and then stops counting those links as time goes on. Maybe Google weeds out links after a certain amount of time? Maybe those links are dropped for the reasons listed above, or maybe there is a new filter in place? I do not know. The data tells me that, for the top articles from all of the months' export files, there is a 34.04% latency in the links over one month and a 17.67% latency over two months. But there are some outliers that tend to skew those results.
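For anyone who wants to run a similar comparison on their own exports, here is a minimal sketch of how a month-over-month drop could be calculated. It is not the exact method behind the percentages above. The function names and the sample counts are placeholders I made up for illustration, not figures from the Webmaster Central CSVs. Since outliers skew the average, the sketch also reports the median as one possible way to soften that effect.

```python
from statistics import mean, median
from typing import Dict, Tuple


def percent_drop(earlier: int, later: int) -> float:
    """Percentage by which a link count fell between two snapshots.

    A negative result means the count went up instead of down.
    """
    if earlier <= 0:
        raise ValueError("earlier count must be positive")
    return (earlier - later) * 100.0 / earlier


def summarize_drops(snapshots: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Mean and median percentage drop across all articles.

    `snapshots` maps an article URL to (earlier_count, later_count).
    """
    drops = [percent_drop(e, l) for e, l in snapshots.values()]
    return {"mean": mean(drops), "median": median(drops)}


# Placeholder counts for illustration only, NOT real export data.
example = {
    "/article-a": (1000, 600),
    "/article-b": (500, 300),
    "/article-c": (200, 190),
}

summary = summarize_drops(example)
print(f"mean drop: {summary['mean']:.2f}%, median drop: {summary['median']:.2f}%")
```

If the mean and the median come out far apart, that is a hint that a few articles are dominating the average.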

One of the patent applications we linked to today is Document Scoring Based on Link-Based Criteria, which Matt Cutts helped to write. In that document, we see that it is possible Google may be looking at, and filtering or scoring on, criteria such as:

- Rate of the disappearance of links to a document

- The time that takes

- The rate of links relative to the freshness of the document

- and so on... (I am not going to list them all out here)

Are the links we see in these webmaster reports provided by Google valuable links? We know nofollowed links show up in the tool. So maybe there is zero filtering going on for the newer links? Maybe not. I just don't know.

I was hoping to learn more about how Google looks at links through these reports, but I do not believe I have. Maybe I need to collect more data over time? But I think the main issue is that I do not have the timestamps of when the data was collected by Google, so it is incredibly hard to analyze this data. A month-by-month snapshot is great, but it is not enough for us to draw any significant conclusions.