I spotted a couple of funny Google search results via the forums that I wanted to share with you.

The first one I spotted via a Google Blogoscoped Forum thread for a image search for [david cameron side profile]. David Cameron is the new Prime Minister of the United Kingdom, by the way. A search for that returns the following image either in the first or second position:

That is an image you would not expect for such a search!

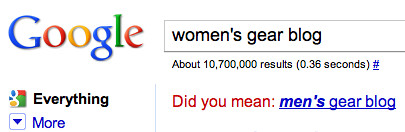

The next funny search result involves Google's "did you mean?" suggestion. Spotted via the Google Web Search Help forum, a search for [women's gear blog] returns a smart response suggesting that you are not looking for a women's gear blog at all, but rather a men's gear blog.

Forum discussion at Google Web Search Help and Google Blogoscoped Forum.