Back in the day, tracking how bots accessed your site was a bit of a craze. Now, you don't hear about it much. The old Google Analytics, aka Urchin, had a section for displaying bot activity on your site. It could do this because Urchin analyzed your server log files as well as using JavaScript tracking, which is the method Google Analytics relies on. Since most spiders don't execute JavaScript, JavaScript-based analytics software such as Google Analytics won't record bot activity.

To track bot activity, you need analytics software that analyzes your log files. There are other methods, including writing your own database script to track all bot activity. Back in the day, Darrin Ward (founder of SEO Chat, which he sold years ago) created a script that looked for bot activity and stored the data in a MySQL database. I forget the name of the software, but I bet it is still out there, and there are plenty of alternatives.
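A do-it-yourself script along those lines might look like the minimal Python sketch below. It is not Darrin Ward's script. It assumes your server writes the common Apache/Nginx "combined" log format, matches user agents against a short hypothetical list of bot tokens (`KNOWN_BOTS`), and uses SQLite from Python's standard library as a stand-in for MySQL. Keep in mind that user-agent strings can be spoofed, so a match only means the request *claims* to be a bot; Google suggests confirming Googlebot with a reverse DNS lookup.

```python
import re
import sqlite3

# Assumed "combined" log format:
# host ident user [time] "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical, deliberately short list: user-agent token -> bot name.
KNOWN_BOTS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "slurp": "Yahoo Slurp",
}


def classify_bot(agent):
    """Return a bot name if the user agent contains a known token, else None."""
    agent = agent.lower()
    for token, name in KNOWN_BOTS.items():
        if token in agent:
            return name
    return None


def store_bot_hits(lines, conn):
    """Parse log lines, insert bot requests into SQLite, return how many were stored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS bot_hits ("
        "bot TEXT, host TEXT, hit_time TEXT, request TEXT, "
        "status INTEGER, size_bytes INTEGER)"
    )
    stored = 0
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that don't fit the assumed format
        bot = classify_bot(match.group("agent"))
        if bot is None:
            continue  # not a known bot (probably a human visitor)
        size = match.group("size")
        conn.execute(
            "INSERT INTO bot_hits VALUES (?, ?, ?, ?, ?, ?)",
            (bot, match.group("host"), match.group("time"),
             match.group("request"), int(match.group("status")),
             0 if size == "-" else int(size)),  # "-" means no body was sent
        )
        stored += 1
    conn.commit()
    return stored


def hits_per_bot(conn):
    """Return {bot name: request count}, ordered by bot name."""
    rows = conn.execute(
        "SELECT bot, COUNT(*) FROM bot_hits GROUP BY bot ORDER BY bot"
    ).fetchall()
    return dict(rows)


if __name__ == "__main__":
    sample = [
        '66.249.66.1 - - [10/Oct/2011:13:55:36 -0400] "GET /page.html HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
        '157.55.39.1 - - [10/Oct/2011:13:56:01 -0400] "GET /robots.txt HTTP/1.1" 200 68 "-" '
        '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
        '203.0.113.7 - - [10/Oct/2011:13:57:12 -0400] "GET / HTTP/1.1" 200 9000 "-" '
        '"Mozilla/5.0 (Windows NT 6.1) Firefox/7.0"',
    ]
    conn = sqlite3.connect(":memory:")  # use a file path to keep the data
    print(store_bot_hits(sample, conn))  # 2
    print(hits_per_bot(conn))  # {'Bingbot': 1, 'Googlebot': 1}
```

Moving this to MySQL would mainly mean swapping the connection object and changing the `?` placeholders to whatever style your MySQL driver expects.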

If you don't want to install anything but still want to track bot activity, there are ways.

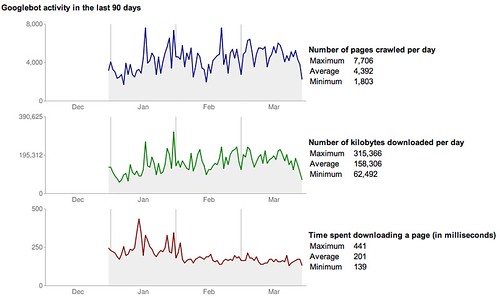

In Google Webmaster Tools, you can go to the "Crawl Stats" section under "Statistics" and get data from Google on how active GoogleBot is on your site. Google will show you data and time based graphs for:

- Number of pages crawled per day

- Number of kilobytes downloaded per day

- Time spent downloading a page (in milliseconds)

Here is a screen shot of our graphs:

If spiders are not crawling your site, you may have cause to worry. Otherwise, this is a metric SEOs rarely discuss.
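If you would rather check crawl activity against your own logs, the first two Crawl Stats graphs (requests per day and kilobytes downloaded per day) can be roughly approximated with a sketch like this one. It assumes the "combined" log format and a simple case-insensitive "googlebot" user-agent match, and it counts every request, including robots.txt and images, so its numbers will not line up exactly with Google's. Download time is skipped because the combined format does not record it; you would have to add something like Apache's `%D` or Nginx's `$request_time` to your log format first.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumes the "combined" log format; only the fields needed here are captured.
LOG_PATTERN = re.compile(
    r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "[^"]*" \d{3} (?P<size>\d+|-) '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_crawl_stats(lines):
    """Return {date: (requests, kilobytes)} for requests whose user agent mentions Googlebot."""
    requests = defaultdict(int)
    byte_totals = defaultdict(int)
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None or "googlebot" not in match.group("agent").lower():
            continue  # malformed line or not (claiming to be) Googlebot
        # Timestamps look like 10/Oct/2011:13:55:36 -0400; group by the logged local date.
        day = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z").date()
        requests[day] += 1
        size = match.group("size")
        byte_totals[day] += 0 if size == "-" else int(size)  # "-" means no body sent
    return {day: (requests[day], byte_totals[day] / 1024) for day in sorted(requests)}
```

Because the function only iterates over lines, you could pass it an open log file directly, for example `googlebot_crawl_stats(open("access.log"))`, where `access.log` is a placeholder path.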

Forum discussion at HighRankings Forums.