I have a client with a very large database-driven site. The site is extremely crawlable, which yields a large number of pages targeting very specific search terms. I cannot share the site I am talking about, because I do not have client approval. But I did want to share a new Google Webmaster Tools message this client received, which in essence warns the webmaster that Googlebot may "consume much more bandwidth than necessary."

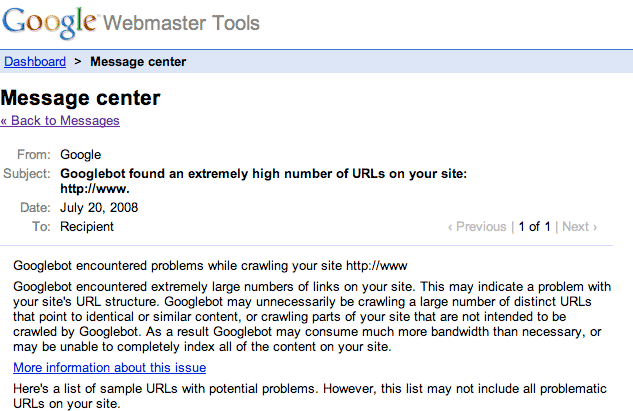

The subject line of the message reads: Googlebot found an extremely high number of URLs on your site

The body of the message reads:

Googlebot encountered problems while crawling your site http://www.domain.com/. Googlebot encountered extremely large numbers of links on your site. This may indicate a problem with your site's URL structure. Googlebot may unnecessarily be crawling a large number of distinct URLs that point to identical or similar content, or crawling parts of your site that are not intended to be crawled by Googlebot. As a result Googlebot may consume much more bandwidth than necessary, or may be unable to completely index all of the content on your site.

More information about this issue

Here's a list of sample URLs with potential problems. However, this list may not include all problematic URLs on your site.

The screenshot above shows the message.

Google goes on to list 20 or so URLs that it found to be problematic. A few of those URLs are definitely already blocked by the site's robots.txt file, so I am not sure why they show up. For the others, I can see why Google might consider them to be "similar content," but technically they are very different pieces of content.
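If you receive the same message and want to double-check which of the sample URLs your robots.txt already blocks, a short script can help. The following is only a rough sketch using Python's standard `urllib.robotparser`. The rules and URLs are made-up placeholders (not my client's), and `blocked_urls` is a helper name I invented for illustration. Python's parser does simple prefix matching, so its verdict may differ from Googlebot's own interpretation, for example on wildcard patterns.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt rules, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /print/
"""

# Hypothetical stand-ins for the sample URLs listed in the message.
SAMPLE_URLS = [
    "http://www.domain.com/search/?q=widgets",
    "http://www.domain.com/products/widgets/",
    "http://www.domain.com/print/widgets/",
]

if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, SAMPLE_URLS):
        print("already blocked:", url)
```

To run it against a real site, paste in the actual contents of the robots.txt file and the URLs from the message. Any URL it reports is one that the rules, as this parser reads them, already disallow.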

In any event, I had two major questions:

(1) Do you think this means Google will trust this site less? I don't think so.

(2) It seems to me that Google is giving us a choice: block these URLs ourselves, or Google will simply drop them from the index. But Google already drops URLs it believes to be duplicates all the time, so why does this require a specific message? Does it mean that Google won't drop them, and is instead warning that its crawlers will keep crawling and your bandwidth usage will spike?

I have never really seen a discussion on this specific Webmaster Tools message from Google, so let's start one. Please comment here or join the Search Engine Roundtable Forums thread.

Forum discussion at Search Engine Roundtable Forums.